In the rapidly evolving world of large language models (LLMs), the quality of a model’s output depends heavily on the quality of its input: the prompt. While basic prompt engineering focuses on clear instructions, advanced prompt engineering is the practice of crafting prompts to elicit structured reasoning, more nuanced responses, and tighter control over LLM behavior. It is a key step in turning generic AI responses into useful, task-specific ones.

Beyond Basic Prompts: What is Advanced Prompt Engineering?

Advanced prompt engineering moves beyond simply telling an LLM what to do. It involves strategically designing prompts that:

- Guide Reasoning: Encourage the LLM to “think” in a structured way.

- Provide Context and Examples: Give the LLM enough information to perform complex tasks accurately.

- Control Output Format: Ensure the LLM generates responses in a desired structure (e.g., JSON, tables).

- Manage Complexity: Break down intricate problems into manageable steps for the LLM.

This specialized skill enables developers and users to harness the full potential of powerful LLMs for highly specific and demanding applications.

Why Advanced Prompt Engineering Matters

Mastering advanced prompt engineering techniques offers significant benefits:

- Unlocking Complex Reasoning: Enables LLMs to tackle multi-step problems, logical puzzles and creative tasks that go beyond simple retrieval.

- Reducing Hallucinations and Bias: Providing explicit context and guiding the reasoning process helps ground LLM responses in the supplied facts and can reduce, though not eliminate, fabricated or skewed output.

- Improving Accuracy and Reliability: Leads to more consistent, relevant, and correct outputs.

- Tailoring LLM Behavior: Allows for precise control over the model’s tone, persona, and output style, making it suitable for diverse applications.

- Optimizing Resource Usage: Better prompts mean fewer retries and more efficient use of LLM tokens, potentially reducing operational costs.

Key Advanced Prompt Engineering Techniques

Several powerful techniques are at the forefront of advanced prompt engineering:

- Few-Shot / In-Context Learning:

- Concept: Provide a few high-quality input-output examples directly within the prompt before asking the actual question. The LLM then infers the pattern and applies it to the new input.

- Elaboration: This pattern teaches the model by demonstration, allowing it to adapt to specific styles or tasks without extensive fine-tuning. It’s particularly useful when you need the LLM to follow a particular format or logic.
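
As a minimal sketch of few-shot prompting, the snippet below assembles an instruction, a handful of input-output pairs, and the new input into a single prompt. The function name `build_few_shot_prompt` and the `Input:`/`Output:` labels are illustrative choices, not a required format.

```python
from typing import Sequence, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: Sequence[Tuple[str, str]],
    query: str,
) -> str:
    """Assemble instruction, worked examples, and the new input into one prompt."""
    parts = [instruction.strip(), ""]
    for source, target in examples:
        parts.append(f"Input: {source}")
        parts.append(f"Output: {target}")
        parts.append("")
    # End on an open "Output:" so the model continues the demonstrated pattern.
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [
        ("The battery lasts all day.", "positive"),
        ("It broke after a week.", "negative"),
    ],
    "Setup was quick and painless.",
)
print(prompt)
```

Keeping the examples in a list makes it easy to swap or reorder them while experimenting with which demonstrations work best.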

- Chain-of-Thought (CoT) Prompting:

- Concept: Instruct the LLM to articulate its reasoning step by step before providing the final answer. This can be done by simply adding “Let’s think step by step” to the prompt or by including worked examples of reasoning.

- Elaboration: CoT helps the LLM break down complex problems into smaller, more manageable parts, improving its ability to handle multi-step reasoning, arithmetic, and common sense tasks.
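
A small sketch of the zero-shot variant: append the step-by-step cue, ask for a marked final line, and pull that line out of the completion. The `Answer:` marker and both helper names are assumptions made for this example, and the completion shown is hand-written rather than real model output.

```python
import re
from typing import Optional

COT_SUFFIX = (
    "Let's think step by step. "
    "Finish with a line of the form 'Answer: <final answer>'."
)


def build_cot_prompt(question: str) -> str:
    """Append a zero-shot chain-of-thought cue to the question."""
    return f"{question.strip()}\n\n{COT_SUFFIX}"


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the text after the last 'Answer:' marker, or None if absent."""
    matches = re.findall(r"^Answer:\s*(.+)$", completion, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


# Hand-written completion standing in for a real model response.
sample_completion = (
    "There are 3 boxes with 4 pens each, so 3 * 4 = 12 pens.\n"
    "Two pens are removed, so 12 - 2 = 10.\n"
    "Answer: 10"
)
print(build_cot_prompt("How many pens remain?"))
print(extract_final_answer(sample_completion))
```

Asking for a marked final line keeps the reasoning visible while still giving downstream code one predictable place to read the result.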

- Self-Consistency:

- Concept: Sample multiple CoT responses for the same query (typically with a nonzero sampling temperature so the reasoning paths differ), then select the final answer that occurs most often.

- Elaboration: This technique leverages diverse reasoning paths to enhance the accuracy and reliability of answers, mitigating the impact of individual reasoning errors.
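
The voting step can be sketched as a majority count over sampled final answers. Here `sample_answer` is a hypothetical callable that would run one CoT completion and return its final answer; the stub below replays canned values so the example runs without a model.

```python
from collections import Counter
from itertools import cycle
from typing import Callable, Tuple


def self_consistent_answer(
    sample_answer: Callable[[str], str],
    prompt: str,
    n_samples: int = 5,
) -> Tuple[str, float]:
    """Sample several answers and return the most common one with its vote share."""
    answers = [sample_answer(prompt) for _ in range(n_samples)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / n_samples


# Stub sampler: replays canned final answers instead of calling a model.
_canned = cycle(["10", "10", "12", "10", "9"])


def fake_sampler(prompt: str) -> str:
    return next(_canned)


answer, agreement = self_consistent_answer(fake_sampler, "How many pens remain?")
print(answer, agreement)
```

The vote share is a rough confidence signal: a low value suggests the reasoning paths disagree and the question may need a better prompt or human review.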

- Role-Playing / Persona Prompting:

- Concept: Instruct the LLM to adopt a specific persona (e.g., “You are an expert scientist,” “Act as a helpful customer service agent”) or align its responses to a specific audience (e.g., “Explain this to a 5-year-old”).

- Elaboration: The Persona Pattern guides the LLM’s tone and knowledge base, while the Audience Persona Pattern tailors the complexity and style of the output to suit the intended recipient.
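
One common way to apply a persona is to place it in a system message ahead of the user request. The sketch below assumes the widely used list-of-dicts chat format with `role` and `content` keys; the exact message schema depends on the API in use, and `build_persona_messages` is a hypothetical helper.

```python
from typing import Dict, List, Optional


def build_persona_messages(
    persona: str,
    user_request: str,
    audience: Optional[str] = None,
) -> List[Dict[str, str]]:
    """Put the persona (and optional audience) in a system message."""
    system = f"You are {persona}."
    if audience:
        system += f" Write for {audience}."
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_request},
    ]


messages = build_persona_messages(
    persona="an expert scientist",
    user_request="Explain why the sky is blue.",
    audience="a 5-year-old",
)
for message in messages:
    print(message["role"], "->", message["content"])
```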

- ReAct Pattern:

- Concept: Combines “Reasoning” and “Acting.” The LLM alternates between a reasoning step (as in CoT), an action that calls an external tool or environment, and an observation of the result, repeating until it can give a final answer.

- Elaboration: This pattern allows LLMs to dynamically retrieve information, perform calculations, or interact with APIs, making them capable of solving complex, real-world problems beyond their internal knowledge.
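
A rough sketch of a ReAct-style control loop follows. The `Action: tool[input]` and `Final Answer:` line formats are conventions chosen for this example, `react_loop` and `multiply` are hypothetical names, and `scripted_model` replays a fixed trace in place of a real LLM.

```python
import re
from typing import Callable, Dict

ACTION_RE = re.compile(r"^Action:\s*(\w+)\[(.*)\]\s*$", re.MULTILINE)
FINAL_RE = re.compile(r"^Final Answer:\s*(.+)$", re.MULTILINE)


def react_loop(
    model: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    question: str,
    max_steps: int = 5,
) -> str:
    """Alternate model steps and tool calls until a final answer appears."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = model(transcript)
        transcript += step + "\n"
        final = FINAL_RE.search(step)
        if final:
            return final.group(1).strip()
        action = ACTION_RE.search(step)
        if action is None:
            break
        name, argument = action.group(1), action.group(2)
        tool = tools.get(name)
        observation = tool(argument) if tool else f"unknown tool {name!r}"
        # Feed the tool result back so the next step can reason over it.
        transcript += f"Observation: {observation}\n"
    return "no answer within step budget"


def multiply(expression: str) -> str:
    """Toy tool: multiply two integers written as 'a * b'."""
    left, right = expression.split("*")
    return str(int(left) * int(right))


def scripted_model(transcript: str) -> str:
    """Stub that replays a fixed trace instead of calling a real LLM."""
    if "Observation:" not in transcript:
        return "Thought: I need the product.\nAction: multiply[12 * 7]"
    return "Thought: The tool returned the product.\nFinal Answer: 84"


result = react_loop(scripted_model, {"multiply": multiply}, "What is 12 * 7?")
print(result)
```

The step budget matters in practice: without it, a model that never emits a final answer would keep calling tools indefinitely.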

- Question Refinement Pattern:

- Concept: Prompt the LLM to refine or ask clarifying questions if the initial query is ambiguous or insufficient.

- Elaboration: This improves the quality of the final response by ensuring the LLM fully understands the user’s intent, leading to more accurate and relevant outputs.

- Cognitive Verifier Pattern:

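- Concept: Instruct the LLM to break a question into additional sub-questions that would help answer it more accurately, answer each, and combine the results into a final answer. A common variant has the LLM first generate an answer and then critically evaluate it against stated criteria or alternative explanations.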

- Elaboration: This technique enhances reliability by prompting the LLM for self-correction and validation, mimicking a human’s critical thinking process.
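
The draft-then-verify loop described here can be sketched as follows. `answer_with_verification` is a hypothetical helper, the `PASS`/`FAIL:` reply convention is an assumption of this example, and `scripted_model` is a stub so the code runs without a model.

```python
from typing import Callable, List


def answer_with_verification(
    model: Callable[[str], str],
    question: str,
    criteria: List[str],
    max_rounds: int = 2,
) -> str:
    """Draft an answer, ask the model to check it, and revise if it fails."""
    draft = model(f"Question: {question}\nAnswer concisely.")
    checklist = "\n".join(f"- {item}" for item in criteria)
    for _ in range(max_rounds):
        verdict = model(
            f"Question: {question}\nDraft answer: {draft}\n"
            f"Check the draft against these criteria:\n{checklist}\n"
            "Reply 'PASS' or 'FAIL: <reason>'."
        )
        if verdict.strip().upper().startswith("PASS"):
            break
        draft = model(
            f"Question: {question}\nDraft answer: {draft}\n"
            f"Reviewer feedback: {verdict}\nRewrite the answer to fix this."
        )
    return draft


def scripted_model(prompt: str) -> str:
    """Stub model: fails the first draft, then accepts the revision."""
    if "Reviewer feedback:" in prompt:
        return "Water boils at 100 degrees Celsius at sea-level pressure."
    if "Check the draft" in prompt:
        return "PASS" if "sea-level" in prompt else "FAIL: pressure not stated"
    return "Water boils at 100 degrees Celsius."


final = answer_with_verification(
    scripted_model,
    "At what temperature does water boil?",
    ["States the pressure condition"],
)
print(final)
```

Capping the number of rounds keeps cost predictable, since each round adds up to two extra model calls.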

- Flipped Interaction Pattern:

- Concept: Instead of the user always asking questions, prompt the LLM to ask the user questions to gather necessary information or explore a topic collaboratively.

- Elaboration: This pattern creates a more engaging and efficient dialogue, allowing the LLM to take the lead in information gathering.
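
As an illustration, a flipped interaction can be driven by a short loop in which the model asks and the user answers until the model signals it has enough. The `SUMMARY:` stop marker, the trip-planning instruction, and the helper names are all assumptions made for this sketch; `scripted_model` stands in for a real LLM.

```python
from typing import Callable

FLIPPED_INSTRUCTION = (
    "Ask me one question at a time to gather what you need to plan a trip. "
    "When you have enough information, reply with 'SUMMARY:' and the plan."
)


def run_flipped_dialogue(
    model: Callable[[str], str],
    get_user_reply: Callable[[str], str],
    max_turns: int = 5,
) -> str:
    """Let the model lead with questions until it emits a summary."""
    history = [FLIPPED_INSTRUCTION]
    for _ in range(max_turns):
        message = model("\n".join(history))
        if message.startswith("SUMMARY:"):
            return message
        history.append(f"Assistant: {message}")
        history.append(f"User: {get_user_reply(message)}")
    return "SUMMARY: not enough information gathered"


def scripted_model(context: str) -> str:
    """Stub model that asks two fixed questions, then summarizes."""
    if "User: Lisbon" not in context:
        return "Where would you like to go?"
    if "User: 3 days" not in context:
        return "How long will you stay?"
    return "SUMMARY: 3 days in Lisbon"


canned_replies = {
    "Where would you like to go?": "Lisbon",
    "How long will you stay?": "3 days",
}
outcome = run_flipped_dialogue(scripted_model, lambda q: canned_replies[q])
print(outcome)
```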

- Gameplay Pattern:

- Concept: Frame the interaction as a game or a scenario with rules, roles, and objectives for the LLM to follow.

- Elaboration: This can be used to build creative or engaging experiences, or to guide the LLM’s behavior within specific simulated environments.

- Template Prompt:

- Concept: Use predefined structures or placeholders in your prompt that the LLM needs to fill in, ensuring a consistent output format.

- Elaboration: This is crucial for applications requiring structured data extraction or consistent content generation, streamlining downstream processing.
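
A minimal sketch of a template prompt for structured extraction, using Python's standard `string.Template` for the placeholders and `json` to validate the reply. The field names and helper functions are illustrative, and the reply shown is hand-written rather than model output.

```python
import json
from string import Template
from typing import Dict, List

EXTRACTION_TEMPLATE = Template(
    "Extract the fields below from the text and reply with JSON only.\n"
    "Fields: $fields\n"
    "Text: $text\n"
    "JSON:"
)


def build_extraction_prompt(text: str, fields: List[str]) -> str:
    """Fill the template's placeholders with the field list and source text."""
    return EXTRACTION_TEMPLATE.substitute(fields=", ".join(fields), text=text)


def parse_structured_reply(reply: str, fields: List[str]) -> Dict[str, object]:
    """Parse the reply as JSON and fail loudly if a field is missing."""
    data = json.loads(reply)
    missing = [name for name in fields if name not in data]
    if missing:
        raise ValueError(f"reply is missing fields: {missing}")
    return data


FIELDS = ["name", "city"]
prompt = build_extraction_prompt("Ada moved to London in 1842.", FIELDS)
# Hand-written reply standing in for a model response.
record = parse_structured_reply('{"name": "Ada", "city": "London"}', FIELDS)
print(prompt)
print(record)
```

Validating the reply right away means a malformed response is caught at the boundary instead of surfacing later in downstream processing.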

- Meta Language Creation Pattern:

- Concept: Define a specific, custom notation or set of symbols within your prompt, state what each one means, and ask the LLM to interpret your input or write its output in that notation.

- Elaboration: A prompt cannot change how the model works internally, but a well-defined shorthand removes ambiguity from your instructions and gives tighter control over output structure, enabling complex rule-based interactions.

- Recipe Pattern:

- Concept: Provide the LLM with a step-by-step “recipe” or a set of sequential instructions to follow to achieve a desired outcome.

- Elaboration: Useful for complex tasks that can be broken down into discrete, ordered steps, ensuring the LLM follows a logical progression.

- Alternative Approaches Pattern:

- Concept: Ask the LLM to generate multiple distinct solutions or perspectives to a given problem.

- Elaboration: This encourages diverse thinking and provides a broader range of options, especially valuable for creative problem-solving or decision support.

- Ask for Input Pattern:

- Concept: Prompt the LLM to explicitly request additional information from the user when it determines it needs more context to provide a complete or accurate answer.

- Elaboration: This helps manage ambiguity and ensures the LLM provides the most relevant response by proactively seeking necessary details.

- Expansion Pattern:

- Concept: Instruct the LLM to elaborate on a given short input, expanding it into a more detailed explanation, narrative, or analysis.

- Elaboration: Ideal for content creation tasks where you need to generate longer-form text from concise ideas or keywords.

- Menu Actions Pattern:

- Concept: Present the LLM with a list of predefined actions or options and instruct it to choose or perform one based on the user’s intent.

- Elaboration: This guides the LLM within a constrained set of functionalities, common in conversational AI for directing user interactions.
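
One way to sketch this: list the allowed actions in the prompt, then accept the model's reply only if it names one of them. The action names, the `MENU` dictionary, and the fallback choice are hypothetical examples for a customer-support setting.

```python
from typing import Dict

MENU: Dict[str, str] = {
    "check_order": "Look up the status of an order",
    "start_return": "Begin a return for a delivered item",
    "talk_to_human": "Hand the conversation to a support agent",
}


def build_menu_prompt(user_message: str) -> str:
    """List the allowed actions and ask the model to name exactly one."""
    options = "\n".join(f"- {name}: {desc}" for name, desc in MENU.items())
    return (
        "Choose exactly one action for the message below and reply with "
        "its name only.\n"
        f"Actions:\n{options}\n"
        f"Message: {user_message}\nAction:"
    )


def resolve_action(model_reply: str, fallback: str = "talk_to_human") -> str:
    """Accept the reply only if it names a menu action; otherwise fall back."""
    choice = model_reply.strip().lower()
    return choice if choice in MENU else fallback


print(build_menu_prompt("Where is my package?"))
print(resolve_action(" Start_Return \n"))
print(resolve_action("delete_account"))
```

Checking the reply against the menu in code, rather than trusting the prompt alone, keeps an off-menu reply from triggering an unintended action.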

- Checklist Pattern:

- Concept: Provide the LLM with a checklist of items or criteria to verify, fulfill, or include in its response.

- Elaboration: Ensures completeness and accuracy by guiding the LLM to systematically address all required elements in its output.
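
A small sketch of the checklist idea: put the items in the prompt, then audit the response afterward. The keyword match used here is a deliberately crude stand-in for a real check (which might be another model call or a human review), and both function names are hypothetical.

```python
from typing import Dict, List


def build_checklist_prompt(task: str, checklist: List[str]) -> str:
    """Append the checklist to the task so the model sees every requirement."""
    items = "\n".join(f"[ ] {item}" for item in checklist)
    return f"{task}\n\nBefore answering, make sure the response covers:\n{items}"


def audit_response(response: str, required_terms: Dict[str, str]) -> List[str]:
    """Return checklist items whose keyword does not appear in the response."""
    lowered = response.lower()
    return [
        item
        for item, keyword in required_terms.items()
        if keyword.lower() not in lowered
    ]


REQUIRED = {
    "States the root cause": "root cause",
    "Lists next steps": "next steps",
    "Names an owner": "owner",
}
prompt = build_checklist_prompt("Write an incident summary.", list(REQUIRED))
# Hand-written response standing in for model output.
response = (
    "Root cause: expired certificate. "
    "Next steps: rotate the certificate and add an expiry alert."
)
print(audit_response(response, REQUIRED))
```

Any items returned by the audit can be fed back to the model as a follow-up request to fill the gaps.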

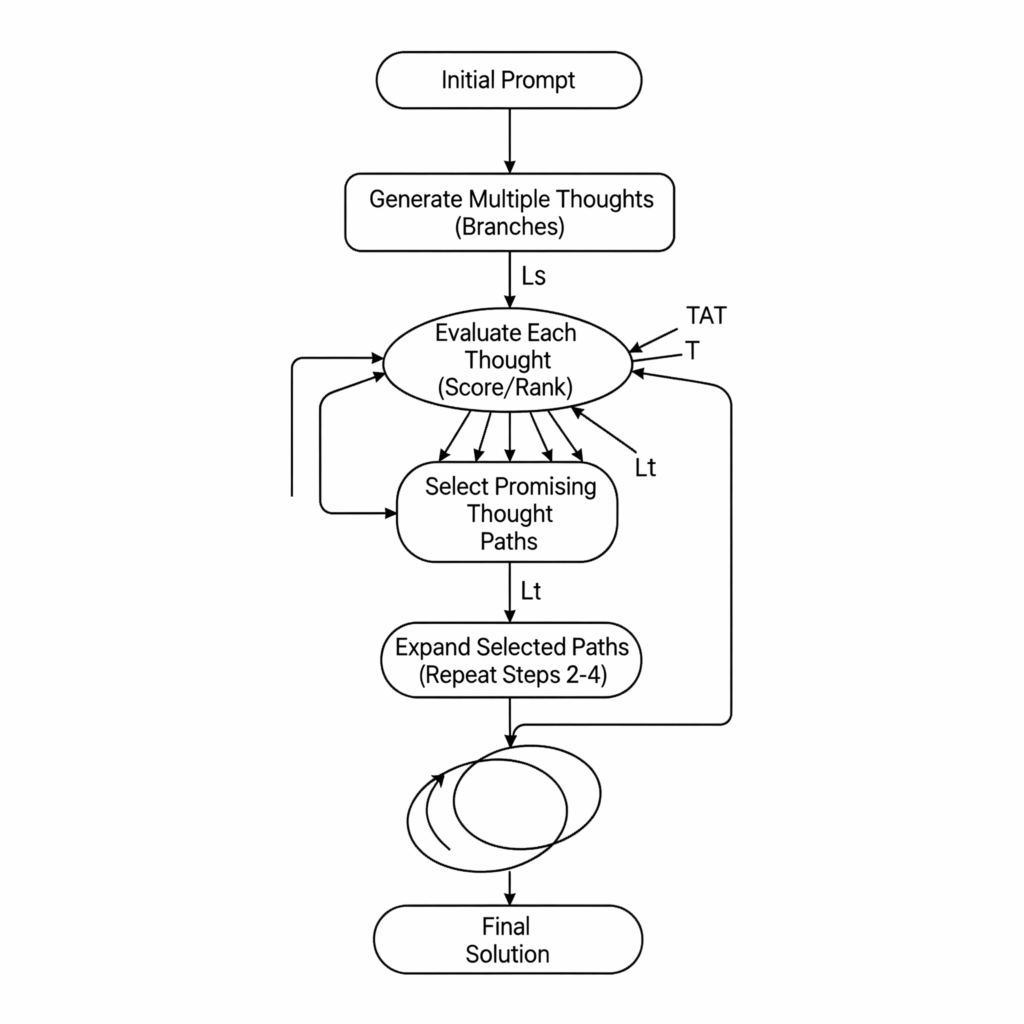

- Tree of Thought Prompting:

- Concept: An advanced reasoning technique where the LLM generates several candidate intermediate steps (“thoughts”) at each stage, forming the branches of a tree that can be evaluated, extended, or abandoned through backtracking.

- Elaboration: This allows the LLM to consider various solution paths, evaluate their potential, and select the most promising one, leading to more robust and accurate complex problem-solving.
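
The search behind this technique can be sketched as a beam search over partial solutions. In a real system `propose` and `score` would typically be LLM calls; here they are plain functions over a toy puzzle (reach exactly 10 using steps of 3 and 4) so the example runs on its own. All names are hypothetical.

```python
from typing import Callable, List, Tuple

State = Tuple[int, ...]


def tree_of_thought_search(
    propose: Callable[[State], List[State]],
    score: Callable[[State], float],
    is_solution: Callable[[State], bool],
    root: State = (),
    beam_width: int = 2,
    max_depth: int = 4,
) -> State:
    """Breadth-first search that keeps only the best-scoring partial thoughts."""
    frontier = [root]
    for _ in range(max_depth):
        candidates = [child for state in frontier for child in propose(state)]
        if not candidates:
            break
        for candidate in candidates:
            if is_solution(candidate):
                return candidate
        # Prune weak branches; surviving siblings act as the backtracking options.
        frontier = sorted(candidates, key=score, reverse=True)[:beam_width]
    return max(frontier, key=score)


TARGET = 10


def propose(state: State) -> List[State]:
    """Extend a partial solution by one step of 3 or 4 without overshooting."""
    return [state + (step,) for step in (3, 4) if sum(state) + step <= TARGET]


def score(state: State) -> float:
    """Higher is better: states closer to the target score higher."""
    return -abs(TARGET - sum(state))


def is_solution(state: State) -> bool:
    return sum(state) == TARGET


path = tree_of_thought_search(propose, score, is_solution)
print(path)
```

In this toy run the highest-scoring branch after two steps, `(4, 4)`, turns out to be a dead end, and the search succeeds through the second branch it kept, which is the behavior the tree structure is meant to provide.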

The Art and Science of Prompt Engineering

Advanced prompt engineering is a dynamic field that combines linguistic intuition with iterative experimentation. It’s an ongoing process of refining instructions, providing precise context, and leveraging sophisticated techniques to unlock the full potential of LLMs. As AI models continue to evolve, mastering advanced prompt engineering will remain an indispensable skill for anyone building and deploying intelligent AI applications.