When we think about artificial intelligence understanding language, often complex neural network architectures come to mind. But what if we could capture some of the essence of language understanding with simpler building blocks? Enter N Grams. These sequences of N consecutive words can surprisingly form the basis of rudimentary neural networks built with the flexibility of PyTorch. Let’s explore this fascinating intersection of traditional language modeling and modern deep learning.

Understanding N Grams: A Foundation in Language

Before diving into neural networks, let’s clarify what N Grams are. Imagine you have a sentence: “The quick brown fox”.

- A unigram (1-gram) would be: “The”, “quick”, “brown”, “fox”.

- A bigram (2-gram) would be: “The quick”, “quick brown”, “brown fox”.

- A trigram (3-gram) would be: “The quick brown”, “quick brown fox”.

Essentially, an N gram is a contiguous sequence of N items from a given sample of text or speech. They capture local word order and some level of short range context within language.

Representing N Grams as Neural Network Inputs

Now, how can we use these N Grams within a neural network in PyTorch? One straightforward approach is to represent each unique N Gram in our vocabulary as a numerical vector. A common method is one hot encoding. For example, if our vocabulary of bigrams includes “the quick”, “quick brown”, and “brown fox”, each would get a unique vector where all elements are zero except for a single one corresponding to that specific bigram.

These one hot encoded N Gram vectors can then serve as the input layer of a simple neural network. Think of it as the network receiving a signal indicating the presence of a particular sequence of words.

Building a Simple N Gram Neural Network in PyTorch

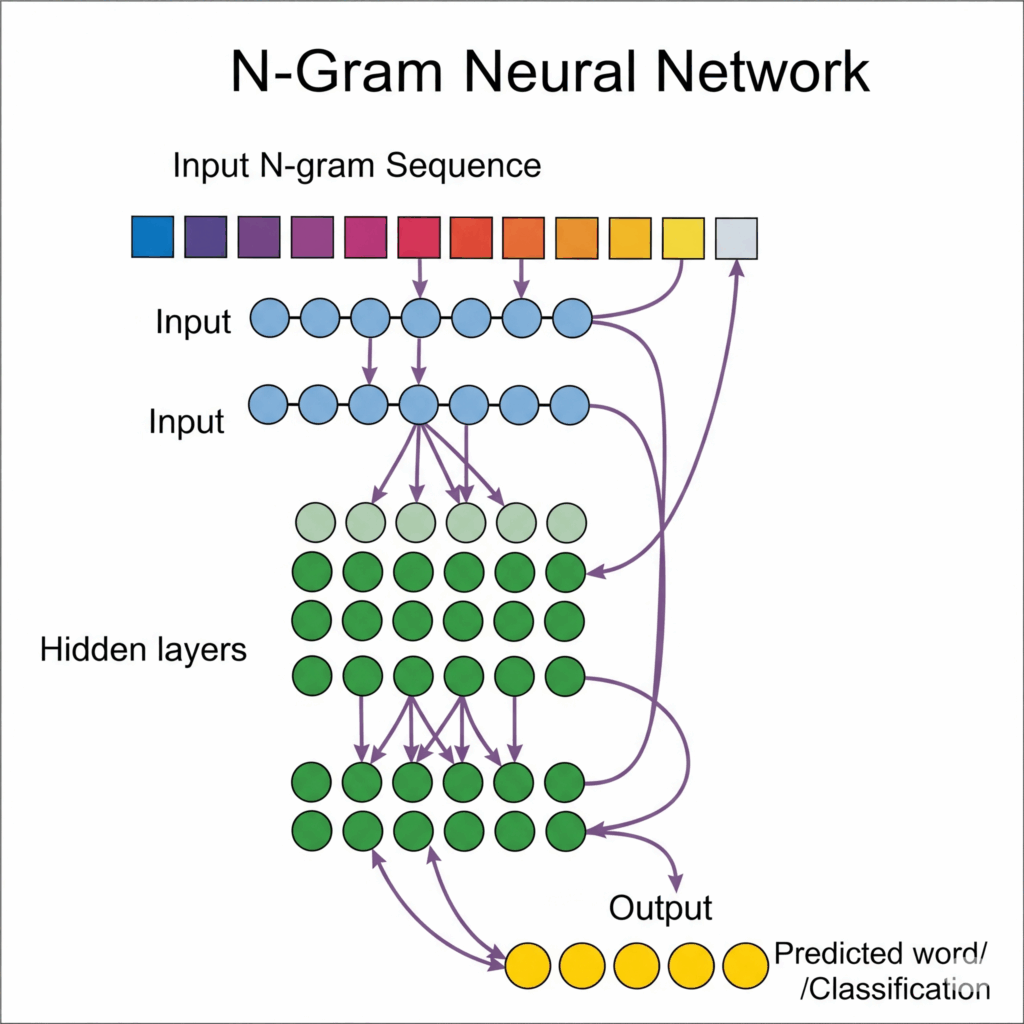

With PyTorch, building such a network is quite intuitive. You might have an input layer whose size matches the total number of unique N Grams in your vocabulary. This could be followed by one or more hidden layers and an output layer depending on the task.

For instance, you could train a network to predict the next word given an N Gram. The input would be the one hot encoded vector of the N Gram, and the output layer could have a size equal to the total vocabulary of individual words, with the activations representing the probability of each word being the next one.

Training Your N Gram Network

The training process in PyTorch for this type of network would follow the standard workflow. You would define a loss function, such as cross entropy loss, to measure the difference between the predicted probabilities and the actual next word. An optimizer, like Adam or SGD, would then be used to update the network’s weights based on the calculated gradients during backpropagation. You would iterate through your text data in epochs, feeding N Grams as input and training the network to minimize the prediction error.

Why Consider N Gram Neural Networks?

While they might not capture the long range dependencies that more complex architectures like Recurrent Neural Networks (RNNs) or Transformers excel at, N Gram based neural networks offer several advantages:

- Simplicity: They are conceptually easier to understand and implement.

- Computational Efficiency: For smaller N values and vocabularies, they can be less computationally demanding to train compared to deeper models.

- Capturing Local Context: They effectively capture immediate word relationships and patterns.

In conclusion, exploring N Grams as neural networks with PyTorch provides a valuable insight into the fundamentals of language modeling and the flexibility of neural network architectures. While modern NLP often leans towards more sophisticated models, understanding these simpler approaches can provide a solid foundation and offer a practical way to model local language context using the power of PyTorch.