Natural Language Processing (NLP) has long focused on enabling machines to understand and interpret human language. The advent of Generative AI has extended the field from comprehension to creation: machines can now produce coherent, fluent text of their own. This combination has opened up new capabilities in text generation and is changing how we interact with and use artificial intelligence.

The Rise of Generative AI in NLP

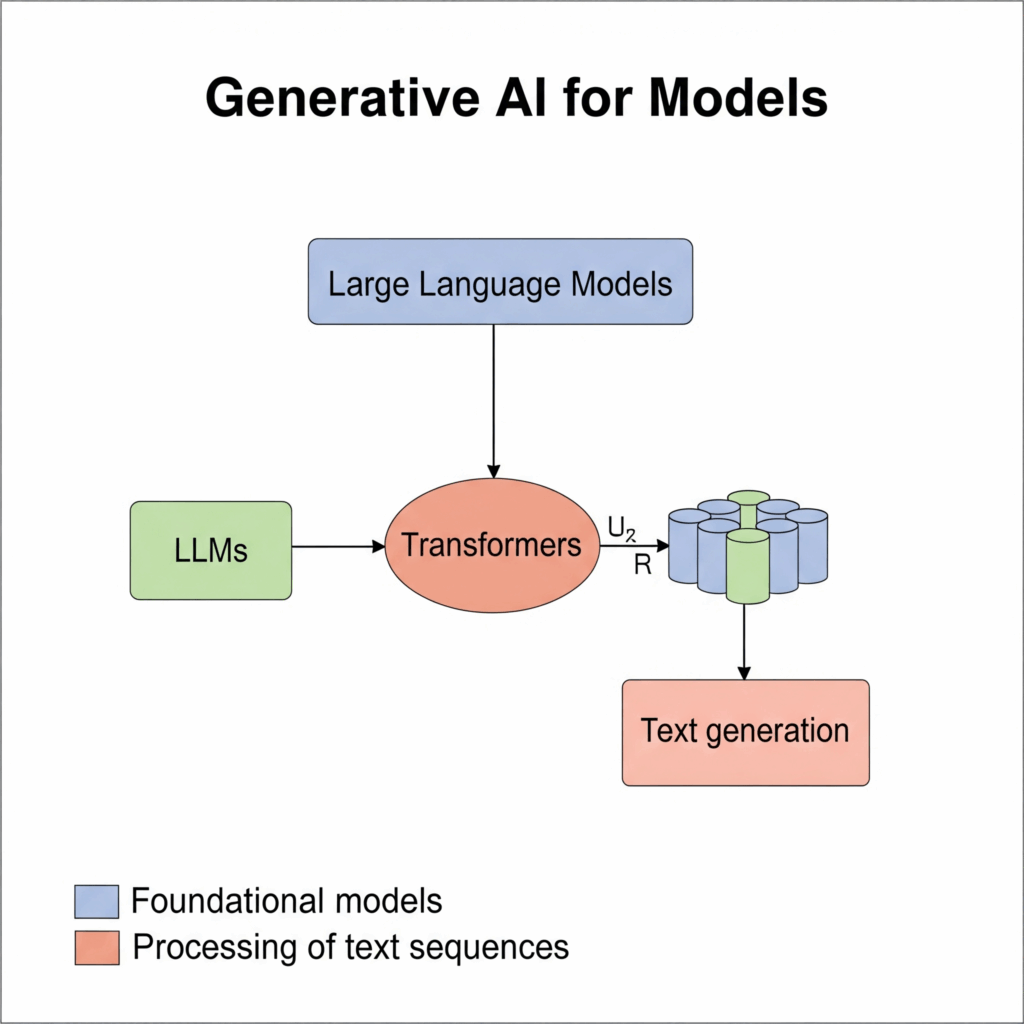

Historically, NLP tasks often involved discriminative models for classification (e.g., sentiment analysis, spam detection) or extraction (e.g., named entity recognition). While powerful, these models did not generate new content. The turning point for generative capabilities in NLP largely came with the widespread adoption of the Transformer architecture. Its ability to model long-range dependencies in text, combined with large-scale pretraining on vast internet corpora, laid the foundation for modern Large Language Models (LLMs) that can produce highly fluent and coherent human-like text.

Large Language Models (LLMs)

Large Language Models are the dominant approach in generative NLP. Models like OpenAI’s GPT series, Google’s Gemini, and Meta’s Llama are all fundamentally generative. They operate on an autoregressive principle, meaning they predict the next token (a word or subword) in a sequence based on all the preceding tokens.

This iterative prediction allows LLMs to “write” text by extending a given prompt. Their immense pretraining enables them to capture vast amounts of linguistic knowledge, facts, and even styles, making them capable of diverse text generation tasks, from answering questions to drafting entire articles.
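The prediction loop can be sketched in a few lines of Python. This is a minimal illustration, not how an LLM is implemented: it assumes a toy bigram model that conditions only on the single previous token (a real LLM conditions on all preceding tokens through a neural network), and the corpus and the names `train_bigram` and `generate` are made up for this example.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count how often each token follows another (whitespace tokenization)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts, prompt, max_new_tokens=4):
    """Greedy autoregressive decoding: append the most likely next token, repeat."""
    tokens = ["<s>"] + prompt.split()
    for _ in range(max_new_tokens):
        candidates = counts.get(tokens[-1])
        if not candidates:  # token never seen during training
            break
        next_token = candidates.most_common(1)[0][0]
        if next_token == "</s>":  # the model predicts the end of the text
            break
        tokens.append(next_token)  # the new token becomes part of the context
    return " ".join(tokens[1:])


# Hypothetical toy corpus, chosen only to make the loop easy to follow.
corpus = [
    "the model predicts the next token",
    "the next token extends the prompt",
    "the next token extends the text",
]
counts = train_bigram(corpus)
print(generate(counts, "the model"))
```

Each iteration feeds the text generated so far back in as context, which is the sense in which the model "extends" a prompt. Production systems usually sample from the predicted distribution rather than always taking the top token, since pure greedy decoding tends to fall into repetitive loops.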

Encoder-Decoder Transformers (for Seq2Seq Tasks)

While decoder-only LLMs such as the GPT series excel at open-ended text generation, other generative architectures derived from the Transformer are specifically designed for “sequence-to-sequence” (Seq2Seq) tasks. Models like T5 or BART use an encoder-decoder structure:

- The Encoder processes the input sequence (e.g., a source language sentence or a long document).

- The Decoder then generates the output sequence (e.g., the translated sentence or a concise summary) based on the encoder’s representation.

This architecture is particularly effective for tasks requiring a transformation from one text sequence to another, such as machine translation and text summarization.
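The division of labor between the two halves can be shown with a deliberately tiny, non-neural sketch. Everything here is an assumption made for illustration: the "encoder states" are plain tuples rather than learned vectors, the position lookup is a crude stand-in for cross-attention, and `LEXICON` is a hypothetical three-word dictionary. Only the data flow (encode once, then decode token by token) mirrors the real architecture.

```python
def encode(source_tokens):
    """Encoder: read the whole input and return one state per position.

    A real encoder returns contextual vectors; here each state is just
    (position, lowercased token) so the data flow stays visible.
    """
    return [(i, tok.lower()) for i, tok in enumerate(source_tokens)]


def decode_step(encoder_states, generated, lexicon):
    """Decoder step: choose the next output token from the encoder states
    and the tokens generated so far."""
    position = len(generated)  # crude stand-in for cross-attention
    if position >= len(encoder_states):
        return "</s>"  # nothing left to translate: emit end-of-sequence
    _, source_token = encoder_states[position]
    return lexicon.get(source_token, source_token)  # copy unknown words


def translate(source, lexicon, max_len=20):
    """Encode the input once, then generate the output one token at a time."""
    states = encode(source.split())
    output = []
    for _ in range(max_len):
        token = decode_step(states, output, lexicon)
        if token == "</s>":
            break
        output.append(token)
    return " ".join(output)


# Hypothetical toy lexicon (English to French), for illustration only.
LEXICON = {"the": "le", "cat": "chat", "sleeps": "dort"}
print(translate("The cat sleeps", LEXICON))
```

In an actual model such as T5 or BART, the decoder attends over all encoder states at every step, which is what allows it to reorder words, drop them, or compress a long document into a short summary.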

Chatbots and Conversational AI

One of the most impactful applications of generative AI in NLP is the development of advanced chatbots and conversational AI. Gone are the days of rigid, rule-based chatbots. Modern conversational agents, powered by generative LLMs, can engage in fluid, natural dialogues, track context across turns, and generate dynamic responses that were not explicitly programmed. This capability has reshaped customer service, virtual assistants, and interactive user experiences.
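One common way such agents keep context is to resend the recent dialogue history to the model on every turn. The sketch below shows only that bookkeeping, under stated assumptions: the `Conversation` class, the `System:`/`User:`/`Assistant:` text layout, and the `max_turns` cutoff are hypothetical choices for this example, and the call to an actual model is left out.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Keeps recent dialogue turns and renders them as a single prompt."""
    system: str                # standing instructions for the assistant
    max_turns: int = 4         # how many recent turns to keep as context
    turns: list = field(default_factory=list)

    def add(self, role, text):
        """Record a turn and drop the oldest ones beyond max_turns."""
        self.turns.append((role, text))
        self.turns = self.turns[-self.max_turns:]

    def to_prompt(self):
        """Render the history so a generative model can continue it."""
        lines = [f"System: {self.system}"]
        lines += [f"{role.capitalize()}: {text}" for role, text in self.turns]
        lines.append("Assistant:")  # the model would write the next reply here
        return "\n".join(lines)


chat = Conversation(system="You are a helpful support agent.")
chat.add("user", "My order has not arrived.")
chat.add("assistant", "Sorry to hear that. What is the order number?")
chat.add("user", "It is the one from last week.")
print(chat.to_prompt())
```

Because the earlier turns are part of the prompt, the model can resolve a reference like "the one from last week" against what was said before. Truncating to the most recent turns is the simplest way to stay within a model's context limit; real systems often summarize older turns instead of discarding them.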

How Generative AI Transforms NLP Tasks

Generative AI has fundamentally reshaped numerous NLP applications:

- Content Creation: Automating the drafting of articles, marketing copy, social media posts, email campaigns, and even story generation or poetry.

- Machine Translation: Producing more nuanced and contextually aware translations, bridging language barriers with increased fluency.

- Text Summarization: Generating abstractive summaries that synthesize information, rather than just extracting sentences.

- Question Answering: Providing direct, generated answers to complex questions, unlike extractive QA systems that just find relevant passages.

- Synthetic Data Generation: Creating diverse and realistic text data for training other machine learning models, especially useful when real data is scarce or sensitive.

- Prompt Engineering: Less a task than a new skill that cuts across all of the above: crafting effective inputs that guide generative models toward the desired outputs, which keeps a human in the loop.
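Several of the tasks above can be steered with the same model simply by changing the prompt. A minimal sketch of one widely used pattern, few-shot prompting, is shown here; the function name `build_few_shot_prompt` and the `Input:`/`Output:` layout are assumptions for this example rather than a required format, and the resulting string would be passed to whichever model is in use.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble an instruction, worked examples, and a new input into one prompt."""
    parts = [instruction.strip(), ""]
    for source, target in examples:
        parts += [f"Input: {source}", f"Output: {target}", ""]
    # End on an open "Output:" so the model's continuation is the answer.
    parts += [f"Input: {query}", "Output:"]
    return "\n".join(parts)


# Hypothetical examples for a one-sentence summarization task.
examples = [
    ("The meeting ran long because the agenda had twelve items.",
     "Meeting overran due to a long agenda."),
    ("Sales rose in March after the new campaign launched.",
     "March sales rose after campaign launch."),
]
prompt = build_few_shot_prompt(
    "Summarize each input in one short sentence.",
    examples,
    "The server was down for two hours while the team replaced a failed disk.",
)
print(prompt)
```

Swapping the instruction and examples turns the same scaffold into a translation, question-answering, or synthetic-data prompt, which is why prompt design has become a skill in its own right.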

Beyond the Hype: Challenges and Ethical Considerations

Despite its remarkable capabilities, generative AI in NLP also presents challenges. Issues like factual inaccuracies (hallucinations), the propagation of biases present in training data, and the potential for misuse (e.g., generating misinformation) require careful attention. Ethical considerations are paramount in the ongoing development and deployment of these powerful systems, emphasizing the need for robust evaluation and responsible use.

Conclusion

Generative AI has ushered in a new era for Natural Language Processing, moving beyond analysis to creation. From the Large Language Models that power everyday conversational AI to specialized encoder-decoder architectures, these models continue to push the boundaries of what machines can achieve with human language, promising richer, more dynamic human-computer interaction.