For a long time, Artificial Intelligence was primarily associated with analysis: classifying data, making predictions, or recognizing patterns. However, a revolutionary shift has occurred with the rise of Generative AI. This exciting field is all about teaching machines to create new, original content, unleashing unprecedented levels of digital creativity across various domains.

What is Generative AI?

At its core, Generative AI refers to systems that learn the underlying patterns and structures within vast amounts of training data in order to produce novel outputs. Unlike discriminative models, which predict labels or categories for existing data, generative models synthesize new data that resembles the data they were trained on. The output could be anything from photorealistic images and fluent text to music, video, or synthetic datasets.

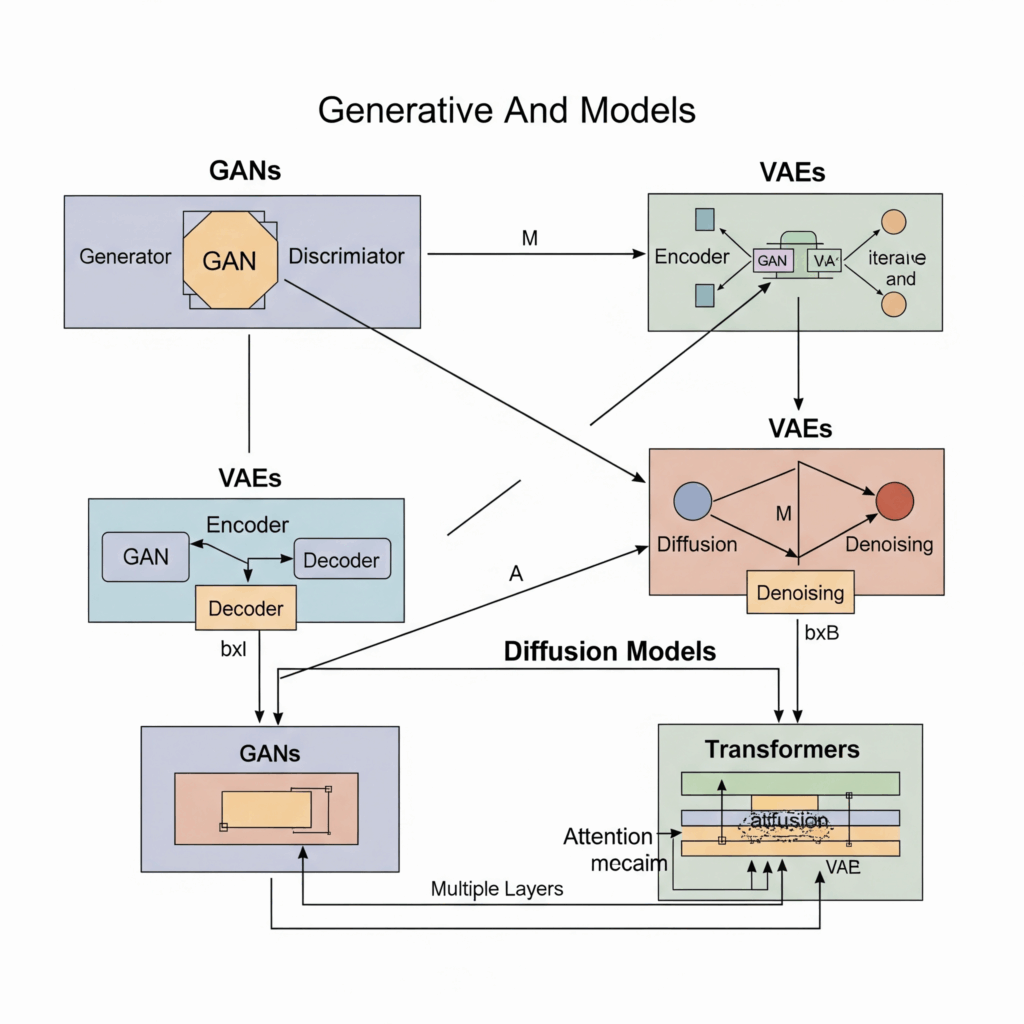

Generative Adversarial Networks (GANs)

Introduced in 2014, GANs revolutionized generative modeling with their ingenious two-part structure. They consist of two competing neural networks:

- The Generator: This network takes random noise as input and tries to create new data (e.g., an image) that looks real.

- The Discriminator: This network acts as a critic. It receives both real data from the training data set and fake data from the Generator, and its job is to distinguish between the two.

This adversarial training process is like an art forger (Generator) trying to fool an art critic (Discriminator). Over time, the Generator learns to produce increasingly realistic outputs while the Discriminator becomes better at detecting fakes, and the competition pushes the generated images toward being highly convincing.
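The forger-versus-critic game can be written down as two loss functions. The following is a minimal sketch, assuming the Discriminator outputs the probability that its input is real and using the common non-saturating form of the Generator loss. The function names and the example probabilities are made up for illustration; no networks are actually trained here.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the critic: real samples should score near 1, fakes near 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating Generator loss: the forger wants its fakes to score near 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical Discriminator outputs (probability "real"), early and late in training.
real = [0.90, 0.95, 0.85]
early_fake = [0.05, 0.10, 0.08]   # fakes are easy to spot
late_fake = [0.45, 0.50, 0.55]    # fakes are nearly indistinguishable from real data

print("generator loss:", generator_loss(early_fake), "->", generator_loss(late_fake))
print("discriminator loss:", discriminator_loss(real, early_fake), "->",
      discriminator_loss(real, late_fake))
```

As the fakes improve, the Generator loss falls and the Discriminator loss rises, which is the tug-of-war the analogy describes.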

Variational Autoencoders (VAEs)

VAEs take a different, more probabilistic approach. They are composed of two main parts:

- The Encoder: This network takes input data and maps it to a compressed, probabilistic representation in a latent space. Instead of a single point, it outputs parameters (like mean and variance) of a probability distribution.

- The Decoder: This network takes a sample from the latent space distribution and tries to reconstruct the original input.

VAEs are well suited to learning the underlying probability distribution of the data. To generate a new sample, you draw a point from the learned latent space and pass it through the Decoder. They are known for smooth interpolations and controlled generation.
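Two pieces of arithmetic sit between the Encoder and the Decoder: drawing a latent sample from the predicted distribution, and measuring how far that distribution is from a standard normal prior. Here is a minimal sketch, assuming the Encoder outputs a mean and a log-variance per latent dimension (a common convention, not something stated above). The numbers standing in for Encoder outputs are hypothetical.

```python
import math
import random

def sample_latent(mean, log_var, rng):
    """Reparameterization: z = mean + std * eps, with eps drawn from N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mean, log_var)]

def kl_to_standard_normal(mean, log_var):
    """KL( N(mean, var) || N(0, I) ) for a diagonal Gaussian, summed over dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv
                     for m, lv in zip(mean, log_var))

rng = random.Random(0)
mean, log_var = [0.5, -1.0], [0.0, -2.0]   # hypothetical Encoder outputs for one input
z = sample_latent(mean, log_var, rng)       # the vector a Decoder would receive
kl = kl_to_standard_normal(mean, log_var)   # regularization term added to the training loss

print("latent sample:", z)
print("KL term:", kl)
```

Writing the sample as the mean plus scaled noise keeps the randomness outside the network's outputs, which is what lets gradients flow through the sampling step during training.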

Diffusion Models

A newer and very powerful class of generative models, Diffusion Models have recently achieved state-of-the-art results, particularly in image generation. The core idea has two parts:

- Forward Diffusion: Gradually adding noise to an image until it becomes pure random noise.

- Reverse Diffusion (Denoising): Learning to reverse this process, step by step, by predicting and removing the noise at each stage to transform pure noise back into a coherent image.

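Models like Stable Diffusion and DALL-E 2 are built upon this principle, and Midjourney is widely reported to be as well (its architecture is not public). The first DALL-E, by contrast, was an autoregressive Transformer. These systems produce remarkably high-quality and diverse outputs.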
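The forward process has a convenient closed form: a noisy version of the image at any step can be computed in one jump from the original. The sketch below assumes a linear noise schedule with 1000 steps, a common choice in the literature rather than anything specified above, and uses a four-number list as a stand-in for image pixels. The reverse step here is handed the true noise. In a real model, a trained network has to predict it.

```python
import math
import random

def alpha_bar_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for a linear noise schedule."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, alpha_bar, rng):
    """Forward diffusion in one jump: x_t = sqrt(a) * x0 + sqrt(1 - a) * eps."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
          for x, e in zip(x0, eps)]
    return xt, eps

def remove_noise(xt, eps, alpha_bar):
    """Invert the forward jump given a noise estimate (here the true eps, not a network's guess)."""
    return [(x - math.sqrt(1.0 - alpha_bar) * e) / math.sqrt(alpha_bar)
            for x, e in zip(xt, eps)]

rng = random.Random(0)
alpha_bars = alpha_bar_schedule(1000)
x0 = [0.2, -0.7, 1.0, 0.4]                       # tiny stand-in for image pixels
xt, eps = add_noise(x0, alpha_bars[499], rng)    # halfway through the schedule
x0_recovered = remove_noise(xt, eps, alpha_bars[499])

print("signal kept at the last step:", math.sqrt(alpha_bars[-1]))
print("recovered:", x0_recovered)
```

By the final step almost none of the original signal remains, which is why generation can start from pure noise. The quality of the result then depends entirely on how well the network predicts the noise at each step.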

Transformers (for Text Generation)

While the Transformer architecture is famous for its role in understanding language, it is also a cornerstone of text generation. Large language models (LLMs) like OpenAI’s GPT series are fundamentally generative Transformers. They learn to predict the next word or token in a sequence based on the preceding context. By iteratively predicting tokens and appending each one to the context, these models can generate lengthy, coherent, and contextually relevant text, from articles and stories to code and conversational responses.
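The generation loop itself is simple, and it can be shown without a neural network. In this minimal sketch a hand-written lookup table stands in for the model. The table, its tokens, and the `generate` function are all hypothetical, and a real LLM would compute the next-token probabilities from the entire preceding context rather than only the last token.

```python
import random

# Hypothetical next-token probabilities. A real LLM computes these with a Transformer
# conditioned on the whole preceding context, not just the most recent token.
NEXT_TOKEN_PROBS = {
    "<start>": {"the": 1.0},
    "the": {"model": 0.6, "text": 0.4},
    "model": {"generates": 1.0},
    "generates": {"text": 0.7, "the": 0.3},
    "text": {"<end>": 1.0},
}

def generate(max_tokens, rng):
    """Autoregressive decoding: sample a token, append it to the context, repeat."""
    tokens = ["<start>"]
    for _ in range(max_tokens):
        probs = NEXT_TOKEN_PROBS[tokens[-1]]
        next_token = rng.choices(list(probs), weights=list(probs.values()))[0]
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens[1:]   # drop the <start> marker

print(" ".join(generate(max_tokens=10, rng=random.Random(0))))
```

Swapping the lookup table for a trained Transformer changes where the probabilities come from, but the sample-append-repeat loop stays the same.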

The Magic Behind the Creation

At their heart, all these generative models learn the complex probability distribution of their training data, largely through unsupervised or self-supervised learning (text-to-image systems additionally rely on paired captions). They internalize the statistical relationships and patterns. Once trained, they can be prompted to sample from this learned distribution, typically producing novel outputs that were not present in the original training set but adhere to its characteristics.
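The learn-then-sample idea can be seen in miniature with a one-dimensional example. This is a deliberately tiny sketch, assuming the data follows a single Gaussian: "training" amounts to estimating two parameters, where a deep generative model learns millions or billions. The dataset is synthetic and invented for illustration.

```python
import random
import statistics

rng = random.Random(0)

# Hypothetical "training set": 500 measurements from some unknown process.
training_data = [rng.gauss(170.0, 8.0) for _ in range(500)]

# "Training": estimate the parameters of the distribution from the data.
mu = statistics.fmean(training_data)
sigma = statistics.stdev(training_data)

# "Generation": draw new values from the learned distribution.
new_samples = [rng.gauss(mu, sigma) for _ in range(5)]

print("learned mean and std:", mu, sigma)
print("new samples:", new_samples)
```

The new values do not appear in the training set, yet they look like they could have come from it.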

Impact and Future

Generative AI is rapidly transforming industries, giving artists, designers, writers, and developers powerful new tools. From automating content creation to enabling novel product designs, these models are redefining the boundaries of what machine learning can achieve, pushing us into a new era of AI-powered creativity.