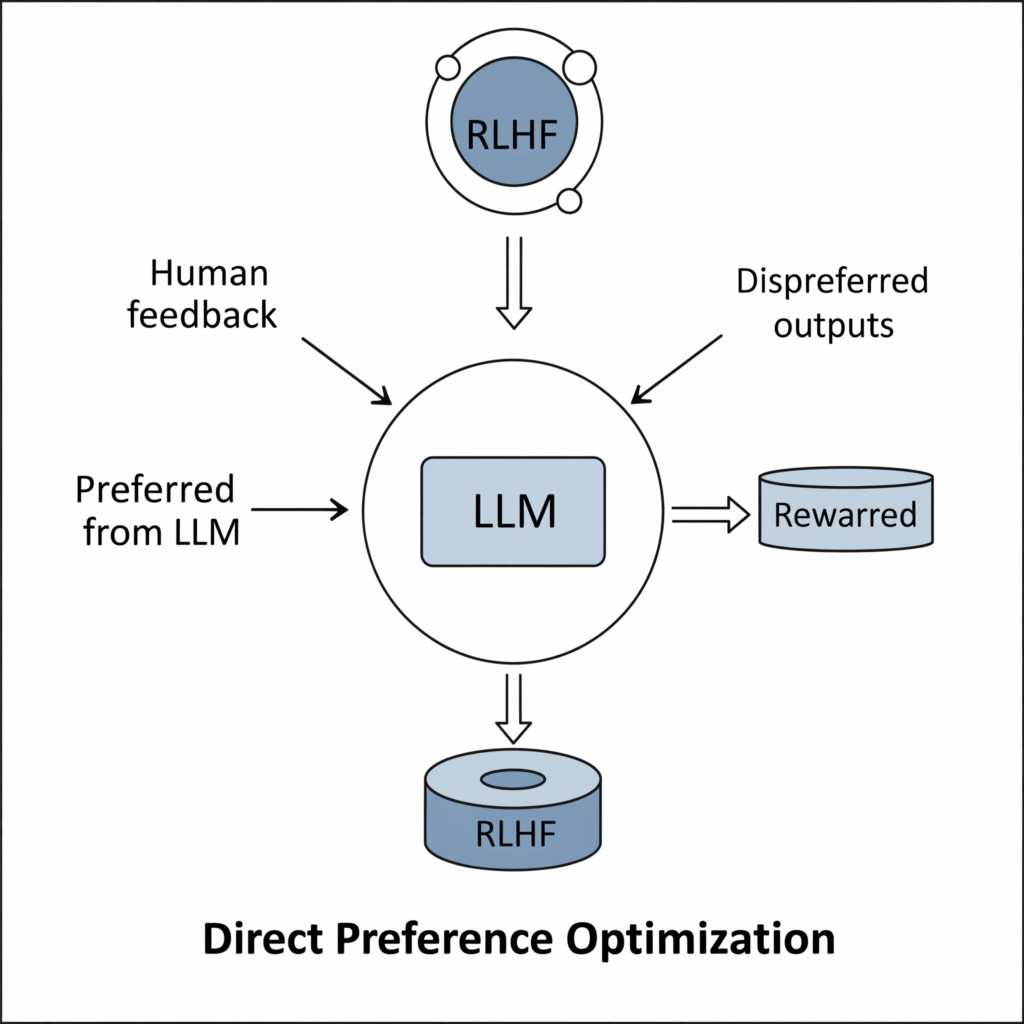

Aligning large language models (LLMs) with complex human values is a grand challenge in artificial intelligence. Traditional approaches like Reinforcement Learning from Human Feedback (RLHF) have proven effective, but they involve multi-step pipelines that can be computationally intensive and difficult to stabilize. Enter Direct Preference Optimization (DPO), a method that fine-tunes LLMs directly on human preference data. It targets the same optimal policy as RLHF while bypassing much of the complexity of its predecessors.

The Challenge of RLHF and the Rise of DPO

Standard RLHF typically involves two main phases: first, training a separate reward model to quantify human preferences, and then using a reinforcement learning algorithm (like PPO) to update the policy model (the LLM) to maximize these learned rewards. While powerful, this two-step approach can introduce instability, require significant hyperparameter tuning, and demand substantial computational resources.

DPO emerged as a more elegant and direct alternative. It reframes the alignment problem as a simpler, more stable supervised learning objective, optimizing the policy directly on pairs of chosen and rejected responses.

DPO: Directly Optimizing Human Preferences

The core brilliance of DPO lies in its simplicity. Instead of explicitly training a reward model, DPO works directly with human preference data: pairs of a preferred response and a rejected response for a given prompt. A mathematical derivation turns these pairs into a loss function that guides the policy model to increase the likelihood of preferred responses while decreasing the likelihood of rejected ones.
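To make the loss concrete, here is a minimal sketch for a single preference pair in plain Python. The function name `dpo_pair_loss`, the argument names, and the default `beta=0.1` are illustrative assumptions and not taken from any library. The inputs are the sequence log-probabilities of each response under the policy and under a frozen reference model (typically the model before alignment), and the numbers in the usage lines are made up.

```python
import math

def dpo_pair_loss(policy_logp_chosen, policy_logp_rejected,
                  ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """DPO loss for one (chosen, rejected) pair, given sequence log-probabilities."""
    # Log-ratio of policy to reference for each response.
    chosen_logratio = policy_logp_chosen - ref_logp_chosen
    rejected_logratio = policy_logp_rejected - ref_logp_rejected
    # Scaled margin: positive when the policy favors the chosen response
    # more strongly than the reference does.
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(margin)), computed as softplus(-margin) in a stable form.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))

# Policy identical to the reference: the margin is 0 and the loss is log(2).
loss_at_start = dpo_pair_loss(-12.0, -15.0, -12.0, -15.0)
# Policy has shifted probability toward the chosen response: the loss drops.
loss_after = dpo_pair_loss(-10.0, -18.0, -12.0, -15.0)
```

The loss starts at log(2) when the policy equals the reference and falls as the policy widens the gap between the chosen and rejected responses, which is the behavior the paragraph above describes.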

This direct optimization avoids the need for an additional neural network (the reward model) and the complexities of reinforcement learning algorithms. The policy is updated simply by performing gradient descent on the DPO loss, making the entire alignment process much more straightforward and robust.

The DPO Optimal Solution: What It Aims For

DPO’s name hints at its objective: it aims to find the optimal policy, the one that best reflects the underlying human preferences. The key insight from the DPO paper (“Direct Preference Optimization: Your Language Model is Secretly a Reward Model”) is that, under the KL-regularized reward-maximization objective used in RLHF, there is a direct, closed-form relationship between a reward function and its optimal policy. DPO’s loss function is derived from this relationship, so it optimizes the policy against an implicit reward without ever fitting an explicit reward model.
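For readers who want the relationship written out, the block below restates it in the notation commonly used for the DPO paper. The symbols are not defined in the text above and are introduced here as assumptions: $\beta$ is the strength of the KL penalty, $\pi_{\text{ref}}$ is the reference policy, $r$ is a reward function, and $Z(x)$ is a normalizing constant that depends only on the prompt.

```latex
% KL-regularized reward maximization (the objective RLHF optimizes)
\max_{\pi}\; \mathbb{E}_{x \sim \mathcal{D}}\Big[
  \mathbb{E}_{y \sim \pi(\cdot \mid x)}\big[r(x, y)\big]
  - \beta\, \mathrm{KL}\big(\pi(\cdot \mid x)\,\|\,\pi_{\text{ref}}(\cdot \mid x)\big)
\Big]

% Its closed-form optimum
\pi^{*}(y \mid x) = \frac{1}{Z(x)}\, \pi_{\text{ref}}(y \mid x)\,
  \exp\!\Big(\tfrac{1}{\beta}\, r(x, y)\Big),
\qquad
Z(x) = \sum_{y} \pi_{\text{ref}}(y \mid x)\, \exp\!\Big(\tfrac{1}{\beta}\, r(x, y)\Big)

% Rearranged: the reward expressed through the optimal policy
r(x, y) = \beta \log \frac{\pi^{*}(y \mid x)}{\pi_{\text{ref}}(y \mid x)} + \beta \log Z(x)
```

The last line is the sense in which the language model is "secretly a reward model": up to a prompt-dependent constant, the reward can be read off from the policy's log-ratio against the reference.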

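If a response y_w is preferred over y_l for a given prompt x, DPO’s objective compares how much more likely each response is under the policy than under a reference model (often the original, unaligned LLM). It pushes the policy to make this log-probability ratio larger for y_w than for y_l. Scoring relative to the reference keeps the policy from drifting arbitrarily far from its starting point, which is crucial for alignment.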
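Written as a formula, this objective is commonly stated as follows. This is a restatement in the DPO paper's notation: $\sigma$ is the logistic sigmoid, $\beta$ is a hyperparameter controlling how strongly the policy is tied to the reference, $\pi_{\theta}$ is the policy being trained, and $\mathcal{D}$ is the preference dataset. None of these symbols are named in the text above.

```latex
\mathcal{L}_{\text{DPO}}(\pi_{\theta}; \pi_{\text{ref}}) =
  -\,\mathbb{E}_{(x,\, y_w,\, y_l) \sim \mathcal{D}}
  \left[ \log \sigma\!\left(
    \beta \log \frac{\pi_{\theta}(y_w \mid x)}{\pi_{\text{ref}}(y_w \mid x)}
    - \beta \log \frac{\pi_{\theta}(y_l \mid x)}{\pi_{\text{ref}}(y_l \mid x)}
  \right) \right]
```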

Unpacking the DPO Partition Function

While the full mathematical derivation is complex, the concept of the partition function is key to understanding DPO’s theoretical foundation. In statistical mechanics, a partition function (often denoted as Z) normalizes probabilities over all possible states. In DPO’s context, Z depends on the prompt and sums over every possible response, which makes it intractable to compute for an LLM. It arises naturally in the relationship between the underlying reward function and the log ratio of the policy’s probabilities to the reference model’s.

The beauty of DPO’s formulation is that when comparing chosen and rejected responses to the same prompt, the partition function terms cancel out in the final loss function. This cancellation is what allows DPO to optimize the policy with a simple cross-entropy-like objective, without needing to compute or estimate the partition function during training. Only the difference between the two responses enters the loss, so the model learns relative preferences directly.
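The cancellation can be checked numerically on a toy problem. The sketch below assumes the optimal-policy form from the DPO paper, in which the optimal policy is proportional to the reference policy times exp(reward / beta). The three candidate responses, their reference probabilities, their rewards, and `beta = 0.5` are all invented for illustration.

```python
import math

beta = 0.5
# Hypothetical toy setup: three candidate responses to one prompt.
ref_probs = {"a": 0.5, "b": 0.3, "c": 0.2}    # reference policy pi_ref(y|x)
rewards = {"a": 1.0, "b": 0.2, "c": -0.5}     # some reward r(x, y)

# Unnormalized optimal policy and its partition function Z(x).
unnorm = {y: ref_probs[y] * math.exp(rewards[y] / beta) for y in ref_probs}
Z = sum(unnorm.values())
opt_probs = {y: u / Z for y, u in unnorm.items()}

def implicit_reward(y):
    """beta * log(pi*(y|x) / pi_ref(y|x)), which equals r(x, y) - beta * log Z."""
    return beta * math.log(opt_probs[y] / ref_probs[y])

# Every implicit reward is offset from the true reward by the same beta * log Z ...
offsets = [rewards[y] - implicit_reward(y) for y in ref_probs]
# ... so the offset disappears from any pairwise difference.
true_margin = rewards["a"] - rewards["c"]
implicit_margin = implicit_reward("a") - implicit_reward("c")
```

Here `offsets` holds the same value three times, and `implicit_margin` matches `true_margin` up to floating-point error, even though `Z` was never needed to form the difference. In a real LLM the sum defining `Z` ranges over all possible responses, which is why avoiding it matters.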

Benefits and Simplicity with PyTorch

The practical advantages of DPO are substantial:

- Simplicity: No separate reward model training, no complex reinforcement learning loop.

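- Stability: Training is plain gradient descent on a fixed dataset, which tends to be more stable than RL algorithms such as PPO.

- Efficiency: Training is often faster and needs less compute, since there is no reward model to train and no RL loop to run.

- Robustness: Typically less sensitive to hyperparameter choices than RL-based pipelines.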

These benefits make DPO an attractive method for fine-tuning large language models in PyTorch. Its loss function takes only a few lines to implement, and it updates the LLM’s weights directly from training data of human preferences.
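As a rough illustration of how short the loss is in PyTorch, here is a minimal batched sketch. The function name `dpo_loss`, its argument names, and `beta=0.1` are assumptions made for this example and not the API of any particular library. The tensors are stand-in values; in real training they would come from summing token log-probabilities of each response under the policy and under the frozen reference model.

```python
import torch
import torch.nn.functional as F

def dpo_loss(policy_chosen_logps, policy_rejected_logps,
             ref_chosen_logps, ref_rejected_logps, beta=0.1):
    """Mean DPO loss over a batch of per-sequence log-probabilities (1-D tensors)."""
    chosen_logratios = policy_chosen_logps - ref_chosen_logps
    rejected_logratios = policy_rejected_logps - ref_rejected_logps
    logits = beta * (chosen_logratios - rejected_logratios)
    # logsigmoid is the numerically stable form of log(sigmoid(x)).
    return -F.logsigmoid(logits).mean()

# Stand-in values for a batch of two preference pairs.
policy_chosen = torch.tensor([-10.0, -8.0], requires_grad=True)
policy_rejected = torch.tensor([-11.0, -7.5], requires_grad=True)
ref_chosen = torch.tensor([-10.5, -8.2])      # reference model is frozen
ref_rejected = torch.tensor([-10.8, -7.9])

loss = dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected)
loss.backward()  # gradients flow only into the policy log-probabilities
```

After `backward()`, the gradient on `policy_chosen` is negative and the gradient on `policy_rejected` is positive, so a gradient descent step raises the log-probability of chosen responses and lowers that of rejected ones. In a full training loop the same call would sit between a forward pass of both models and an optimizer step.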

Conclusion

Direct Preference Optimization represents a significant step forward in LLM alignment. By providing a direct, stable, and computationally efficient way to incorporate human preferences, DPO offers an optimal solution that simplifies the journey from powerful pretrained models to truly helpful and aligned artificial intelligence.